The previous sections covered much of the mid‐level data theory required to be a great Azure data engineer and give you a good chance of passing the exam. Now it’s time to learn a bit about the processing of Big Data across the various data management stages. You will also learn about some different types of analytics and Big Data layers. After reading this section you will be in good shape to begin provisioning some Azure products and performing hands‐on exercises.

Big Data Stages

In general, it makes a lot of sense to break complicated solutions, scenarios, and activities into smaller, less difficult steps. Doing this permits you to focus on a single task, specialize on it, dissect it, master it, and then move on to the next. In the end you end up providing a highly sophisticated solution with high‐quality output in less time. This approach has been applied in the management of Big Data, which the industry has mostly standardized into the stages provided in Table 2.10.

TABLE 2.10 Big Data processing stages

| Name | Description |

| Ingest | The act of receiving the data from producers |

| Prepare | Transforms the data into queryable datasets |

| Train | Analyzes and uses the data for intelligence |

| Store | Places final state data in a protected but accessible location |

| Serve | Exposes the data to authorized consumers |

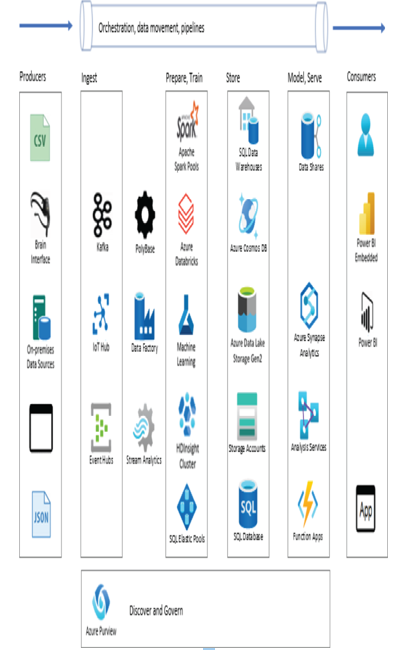

Figure 2.30 illustrates the sequence of each stage and shows which products are useful for each. In addition, Table 2.11 provides a written list of Azure products and their associated Big Data stage. Each product contributes to one or more stages through the Big Data architecture solution pipeline.

FIGURE 2.30 Big Data stages and Azure products

TABLE 2.11 Azure products and Big Data stages

| Product | Stage |

| Apache Spark | Prepare, Train |

| Apache Kafka | Ingest |

| Azure Analysis Services | Model, Serve |

| Azure Cosmos DB | Store |

| Azure Databricks | Prepare, Train |

| Azure Data Factory | Ingest, Prepare |

| Azure Data Lake Storage | Store |

| Azure Data Share | Serve |

| Azure Event / IoT Hub | Ingest |

| Azure HDInsight | Prepare, Train |

| Azure Purview | Governance |

| Azure SQL | Store |

| Azure Stream Analytics | Ingest, Prepare |

| Azure Storage | Store |

| Azure Synapse Analytics | Model |

| PolyBase | Ingest, Prepare |

| Power BI | Consume |

Some Azure products in Table 2.11 can span several stages—for example, Azure Data Factory, Azure Stream Analytics, and even Azure Databricks in some scenarios. These overlaps are often influenced by the data type, data format, platform, stack, or industry in which you are working. As you work with these products in real life and later in this book, the use cases should become clearer. For instance, one influencer of the Azure products you choose is the source from which the data is retrieved, such as the following:

- Nonstructured data sources

- Relational databases

- Semi‐structured data sources

- Streaming

Remember that nonstructured data commonly comes in the form of images, videos, or audio files. Since these file types are usually on the large size, real‐time ingestion is most likely the best option. Otherwise, the ingestion would involve simply using a tool like Azure Storage Explorer to upload them to ADLS. Apache Spark has some great features, like PySpark and MLlib, for working with these kinds of data. Once the data is transformed, you can use Azure Machine Learning or the Azure Cognitive service to enrich the data to discover additional business insights. Relational data can be ingested in real time, but perhaps not as frequently as streaming from IoT devices. Nonetheless, you ingest the data from either devices or from, for example, an on‐premises OLTP database and then store the data on ADLS. Finally, in a majority of cases, the most business‐friendly way to deliver, or serve, Big Data results is through Power BI. Read on to learn more about each of those stages in more detail.

Ingest

This stage begins the process of the Big Data pipeline solution. Consider this the interface for data producers to send their raw data to. The data is then included and contributes to the overall business insights gathering and process establishment. In addition to receiving data for ingestion, it is possible to write a program to retrieve data from a source for ingestion.